Multi-Scale 3D World Generation

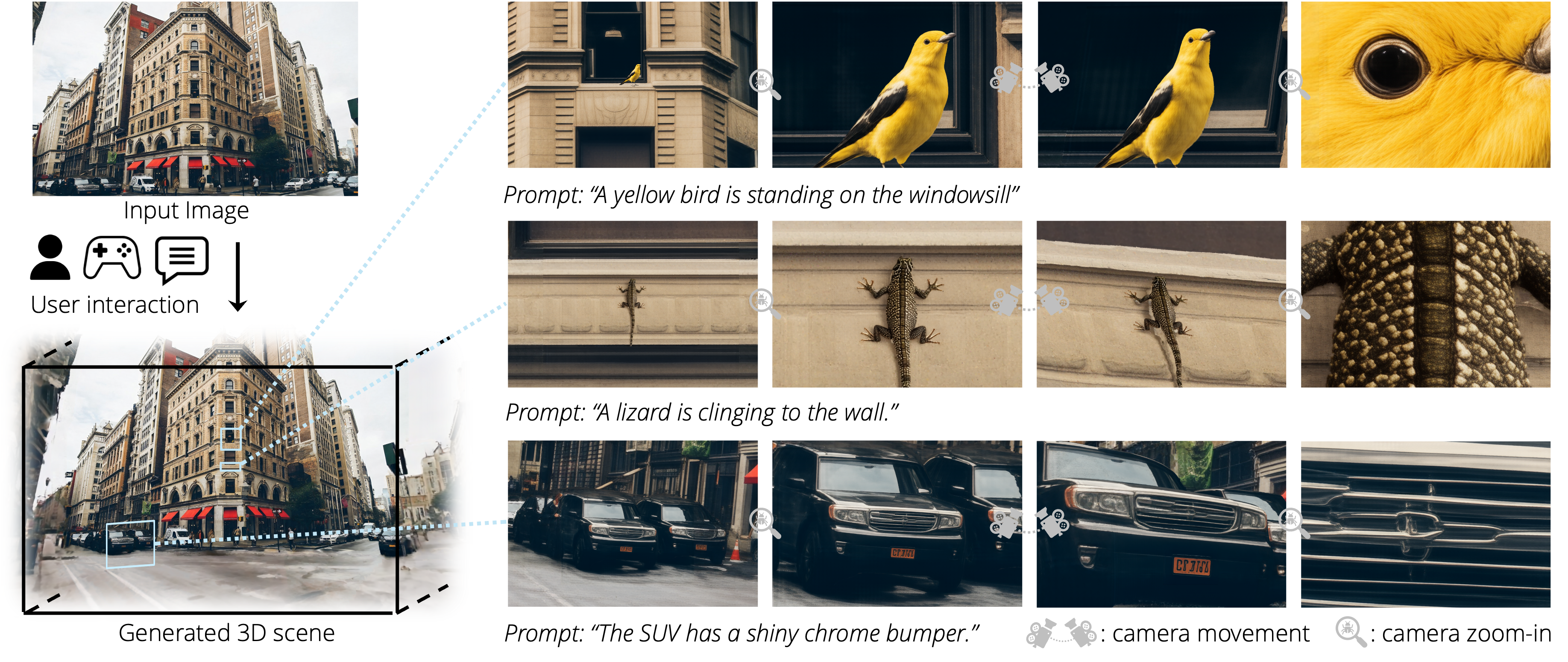

Generated Multi-Scale Worlds

Interactive Viewing

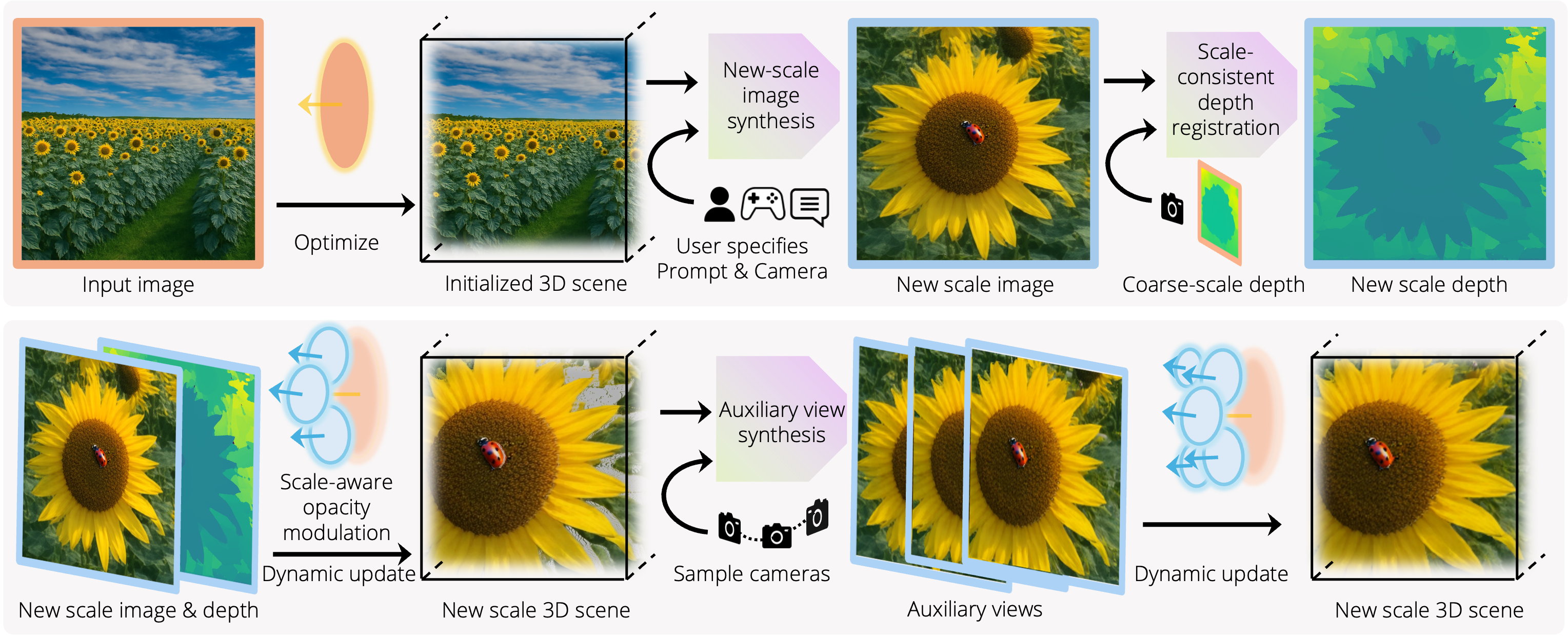

Approach

From an input image, we first reconstruct an initial 3D scene. Users then specify prompts and camera viewpoints to generate content at finer scales. Our progressive detail synthesizer creates images at each new scale, registers their depth to the existing scene to maintain geometric consistency, and synthesizes auxiliary views so that the new content forms a complete 3D scene. Our scale-adaptive Gaussian surfels support dynamic updates without re-optimization, integrating new content into the scene while preserving real-time rendering.
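To make the depth registration step concrete, here is a minimal sketch built on one common assumption. Depth predicted for a newly generated image is often correct only up to an unknown scale and shift. It can be aligned to the current scene by a least-squares fit against depth rendered from the existing geometry, using only the pixels where that geometry is present. This is not necessarily how the synthesizer registers depth, and the names `fit_scale_shift` and `register_depth` are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def fit_scale_shift(
    pred: Sequence[float],
    ref: Sequence[float],
    valid: Optional[Sequence[bool]] = None,
) -> Tuple[float, float]:
    """Least-squares fit of ref ~= s * pred + t over valid pixels.

    Assumption (not from the source): `pred` is the new image's predicted
    depth, `ref` is depth rendered from the existing scene, and `valid`
    marks pixels where the existing scene has geometry to compare against.
    """
    if valid is None:
        valid = [True] * len(pred)
    pairs = [(p, r) for p, r, v in zip(pred, ref, valid) if v]
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two valid pixels to fit scale and shift")
    mean_p = sum(p for p, _ in pairs) / n
    mean_r = sum(r for _, r in pairs) / n
    var_p = sum((p - mean_p) ** 2 for p, _ in pairs)
    if var_p == 0.0:
        raise ValueError("predicted depth is constant over the valid region")
    cov = sum((p - mean_p) * (r - mean_r) for p, r in pairs)
    s = cov / var_p            # closed-form slope of the linear regression
    t = mean_r - s * mean_p    # intercept so the fit passes through the means
    return s, t


def register_depth(
    pred: Sequence[float],
    ref: Sequence[float],
    valid: Optional[Sequence[bool]] = None,
) -> List[float]:
    """Apply the fitted scale and shift to every pixel, including new ones."""
    s, t = fit_scale_shift(pred, ref, valid)
    return [s * p + t for p in pred]


if __name__ == "__main__":
    pred = [1.0, 2.0, 3.0, 4.0]
    ref = [2.5, 4.5, 6.5, 0.0]           # last pixel: no existing geometry
    valid = [True, True, True, False]
    print(fit_scale_shift(pred, ref, valid))
    print(register_depth(pred, ref, valid))
```

Because the fit uses only the overlap region and is then applied to every pixel, the newly generated content (the masked-out last pixel, registered to 8.5) inherits the scale of the existing scene. A real system would work on full depth maps and would likely need robustness to outliers. Both of those are left out here.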

BibTeX